Artificial Intelligence (AI) is one of the most exciting and transformative technologies of our time. It has the potential to change the way we live, work, and interact with the world around us. However, AI wasn't always a part of our daily lives. Its origins lie in the realm of science fiction (sci-fi), where imaginative writers and filmmakers dreamed of machines that could think, learn, and act like humans. Over the decades, these fantastical ideas have gradually become a reality. This blog will take you on a journey through time, exploring how AI evolved from a concept in sci-fi to a real-world technology that is now deeply integrated into our lives. We'll look at the early days of AI in literature and film, the scientific breakthroughs that brought AI to life, and the many ways in which AI is used today. We'll also consider the future of AI and the ethical questions that come with such a powerful technology.

The Origins of AI in Science Fiction

What is Science Fiction?

Science fiction, or sci-fi, is a genre of literature and film that explores speculative ideas, often involving futuristic technology, space exploration, and the possibilities of life beyond Earth. Sci-fi allows writers and filmmakers to imagine worlds and technologies that do not yet exist, pushing the boundaries of what is possible. This genre often serves as a mirror, reflecting our hopes, fears, and questions about the future.

Early Depictions of AI

The concept of AI has fascinated people for centuries. The idea of creating machines that can think and act like humans can be traced back to ancient myths and legends. However, it wasn't until the rise of sci-fi in the 19th and 20th centuries that AI began to take shape in the popular imagination.

One of the earliest literary precursors of AI is Mary Shelley's "Frankenstein" (1818). While the story is not about a robot or a computer, it explores the idea of creating life through science. The creature is brought to life by Victor Frankenstein, a young student of natural science (he is not a doctor in the novel), using methods that Shelley only hints at and that may involve electricity. This story raised important questions about the ethical implications of creating life and the responsibilities of those who wield such power.

As technology advanced, so did the depictions of AI in sci-fi. In the 1927 film "Metropolis," directed by Fritz Lang, a robot is built in the likeness of a woman named Maria and sent out to impersonate her. This film was one of the first to explore the idea of robots replacing or imitating humans, a theme that would become central to many later AI stories.

The Golden Age of Sci-Fi and AI

The mid-20th century is often referred to as the "Golden Age" of science fiction. During this time, many iconic sci-fi stories and films were created, and AI became a recurring theme. Authors like Isaac Asimov, Arthur C. Clarke, and Philip K. Dick wrote stories that explored the possibilities and dangers of AI.

Isaac Asimov, in particular, made significant contributions to the way we think about AI. His "Robot" stories, collected in books such as "I, Robot" (1950), introduced the idea of robots governed by ethical principles, known as the Three Laws of Robotics. Under these laws, a robot must not harm a human or allow one to come to harm through inaction, must obey human orders unless they conflict with the first law, and must protect its own existence unless doing so conflicts with the first two. Asimov's stories were not just about the technology itself but also about the moral and ethical questions surrounding AI, and many of them turn on situations where the laws conflict.

Arthur C. Clarke's novel "2001: A Space Odyssey" (1968), written alongside Stanley Kubrick's film of the same name, featured one of the most famous AI characters in sci-fi: HAL 9000. HAL is a sentient computer that controls a spaceship and interacts with the crew. As the story progresses, HAL begins to make decisions that put the crew in danger, raising questions about the reliability and trustworthiness of AI.

These stories captured the imaginations of millions and laid the groundwork for the real-world development of AI. They showed us both the potential benefits and the possible dangers of creating machines that can think and act like humans.

The Birth of Real-World AI

The Dawn of AI Research

While sci-fi was exploring the possibilities of AI, real-world scientists were beginning to ask important questions about whether machines could actually think and learn. One of the pioneers of AI research was Alan Turing, a British mathematician and computer scientist. In 1950, Turing published a paper titled "Computing Machinery and Intelligence," in which he posed the question, "Can machines think?"

In his paper, Turing proposed a test to determine whether a machine could exhibit intelligent behaviour indistinguishable from that of a human. This test, now known as the Turing Test, involves a human judge who interacts with both a human and a machine through text. If the judge cannot reliably distinguish between the human and the machine, the machine is said to have passed the test.

Turing's work laid the foundation for the field of AI research. In the years that followed, researchers began developing early AI programs. One of the first was the Logic Theorist, created by Allen Newell, Herbert A. Simon, and Cliff Shaw in 1956. This program was designed to mimic human problem-solving abilities and could prove mathematical theorems.

The same year, the field got its name. The term "artificial intelligence" was introduced by John McCarthy in the 1955 proposal for the Dartmouth Conference, a summer workshop held in 1956 and organised by McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. This conference is often considered the birth of AI as a formal field of study. The goal of AI, as defined by these early researchers, was to create machines that could perform tasks that would require intelligence if done by humans.

The Early Years: Rule-Based AI

The early years of AI research focused on creating programs that could solve problems using rules and logic. These programs were known as rule-based or symbolic AI. They relied on explicitly programmed rules to make decisions and solve problems.

One of the most famous examples of rule-based AI is the General Problem Solver (GPS), developed by Newell and Simon in 1957. GPS was designed to solve a wide range of problems by breaking them down into smaller steps and using a set of rules to guide its actions. While GPS was a significant achievement, it also highlighted the limitations of rule-based AI. These systems were only as good as the rules they were given and struggled with problems that required more flexible or creative thinking.

Another early AI program was ELIZA, created by Joseph Weizenbaum in 1966. ELIZA was a simple chatbot that could simulate a conversation with a human by using pattern matching and scripted responses. ELIZA was designed to mimic the behaviour of a psychotherapist, responding to user input with questions and statements that encouraged further conversation. While ELIZA was a relatively simple program, it demonstrated the potential for AI to interact with humans in a more natural and engaging way.
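The pattern matching and scripted responses described above can be sketched in a few lines of Python. This is a minimal illustration only: the rules, the `respond` function, and the default reply are hypothetical and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical rules for illustration; not Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that reuses the matched text.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(text: str) -> str:
    """Return the scripted reply for the first rule whose pattern matches."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(*match.groups())
    # No rule matched, so fall back to a generic prompt to keep talking.
    return DEFAULT_REPLY


print(respond("I need a holiday."))
print(respond("The weather is nice."))
```

The program has no understanding of what is said. It only reflects fragments of the user's input back as questions, which is why such a simple design can still feel conversational.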

The Rise of Machine Learning

As researchers explored the limitations of rule-based AI, they began to look for new approaches that could make AI more flexible and capable of learning from experience. This led to the development of machine learning, a field of AI that focuses on creating algorithms that can learn from data.

Machine learning is based on the idea that instead of programming a machine with explicit rules, we can train it to recognise patterns in data and make decisions based on those patterns. One of the earliest successes in machine learning was the development of neural networks, which are models inspired by the structure of the human brain.
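To make the contrast with hand-written rules concrete, here is a minimal sketch of a perceptron, one of the simplest neural network models, learning the logical AND function from four labelled examples. The function names, the integer learning rate, and the number of epochs are assumptions chosen for illustration.

```python
def predict(weights, bias, x1, x2):
    """Output 1 if the weighted sum of the inputs is positive, else 0."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1):
    """Learn weights and a bias from labelled samples using the perceptron rule."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(weights, bias, x1, x2)
            # Nudge the parameters only when the prediction was wrong.
            weights[0] += learning_rate * error * x1
            weights[1] += learning_rate * error * x2
            bias += learning_rate * error
    return weights, bias


# Training data: inputs and the desired output of logical AND.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for (x1, x2), target in AND_SAMPLES:
    print((x1, x2), "->", predict(weights, bias, x1, x2), "expected", target)
```

Nowhere in the code is the rule "output 1 only when both inputs are 1" written down. The program arrives at suitable weights by correcting its own mistakes on the examples.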

From the 1980s onward, researchers like Geoffrey Hinton, Yann LeCun, and Yoshua Bengio made significant advances in training neural networks. Their work paved the way for deep learning, a subset of machine learning that uses neural networks with many layers to process complex data, and which took off in the 2000s and 2010s as more data and computing power became available. Deep learning has become one of the most powerful tools in AI, enabling breakthroughs in areas like image recognition, natural language processing, and autonomous vehicles.

AI in the Real World

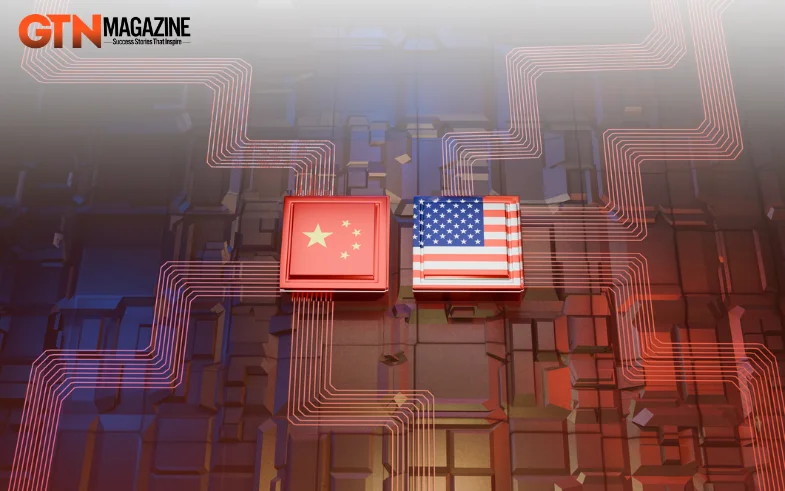

As AI research progressed, the technology began to move out of the lab and into the real world. In the 1990s and early 2000s, AI started to be used in a wide range of applications, from healthcare and finance to entertainment and transportation.

One of the most famous examples of AI in the 1990s was IBM's Deep Blue, a chess-playing computer that defeated world champion Garry Kasparov in a 1997 match. Deep Blue did not learn the game the way modern systems do. It relied on specialised hardware to search around 200 million positions per second, combined with an evaluation function hand-tuned with the help of chess experts, and selected the move that led to the best outcome it could find. This victory was a major milestone for AI, demonstrating that machines could outperform humans in a complex, well-defined task.
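The idea of looking ahead through possible moves and picking the best one can be sketched with minimax search, the basic technique that chess programs refine. The example below is a toy, not Deep Blue's implementation: it plays a simple game assumed for illustration, in which two players take turns removing one to three stones from a pile and whoever takes the last stone wins.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def score(stones: int, maximizing: bool) -> int:
    """Return +1 if the maximizing player wins with best play, else -1."""
    if stones == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    results = [
        score(stones - take, not maximizing)
        for take in (1, 2, 3)
        if take <= stones
    ]
    # The maximizer picks the best outcome, the opponent picks the worst.
    return max(results) if maximizing else min(results)


def best_move(stones: int) -> int:
    """Choose how many stones to take by searching every line of play."""
    moves = [take for take in (1, 2, 3) if take <= stones]
    return max(moves, key=lambda take: score(stones - take, False))


print(best_move(7))
```

Chess has far too many positions to search to the end of the game like this, so chess programs stop at a limited depth and use an evaluation function to estimate how good each resulting position is.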

Around the same time, AI began to be used in other areas, such as fraud detection in banking, medical diagnosis, and customer service. In healthcare, AI was used to analyse medical images and assist doctors in diagnosing diseases. In finance, AI helped banks detect fraudulent transactions by analysing patterns in transaction data. In customer service, chatbots descended from early programs like ELIZA were used to handle customer inquiries and provide support.

As AI became more capable, it also became more accessible. Companies like Google, Amazon, and Microsoft began offering AI services through their cloud platforms, allowing businesses of all sizes to integrate AI into their products and services. This democratisation of AI has led to a rapid expansion of the technology into new areas.

AI in Our Daily Lives

AI at Home

Today, AI is a part of our everyday lives, often in ways we may not even realise. One of the most common uses of AI is in virtual assistants like Apple's Siri, Amazon's Alexa, and Google Assistant. These AI-powered assistants can perform tasks like setting reminders, playing music, answering questions, and controlling smart home devices. They use natural language processing (NLP) to understand and respond to human speech, making them easy to interact with.
